Wheatstone BLADEFEST

Enhancing System Performance

Wheatstone's WheatNet-IP engineers got together to try to break a huge system of control surfaces, processors, and many, many BLADEs, assembled as they would be used in a very large installation. In the process, they made the products faster, better, and stronger. We called it BLADEFEST. And the engineers who took part were our BLADE RUNNERS...

The video below documents the process. The article below (expanded here) appeared in the Jan/Feb 2015 edition of Radio Guide Magazine.

It’s A BLADEFEST

By Dee McVicker

When was the last time a bunch of guys got together for the purpose of breaking something?

I can tell you.

It was in the fall, when the Wheatstone crew of engineers and thrill seekers strung together 52 BLADEs, half a dozen digital audio consoles, Talent Stations, automation systems, control panels of all sorts, SideBoards, audio codecs, audio processors (AirAura X3, FM-55, FM-531, VP-8, M1, M2, M4, Aura8-IP), and the complete family of Wheatstone software applications into a large WheatNet-IP audio network for that very purpose.

We called it BLADEFEST.

Our intention was to test for interoperability of all these things with our new BLADE-3 I/O hardware and software, plus qualify the system behavior under various power and network failure scenarios and Ethernet switch versions.

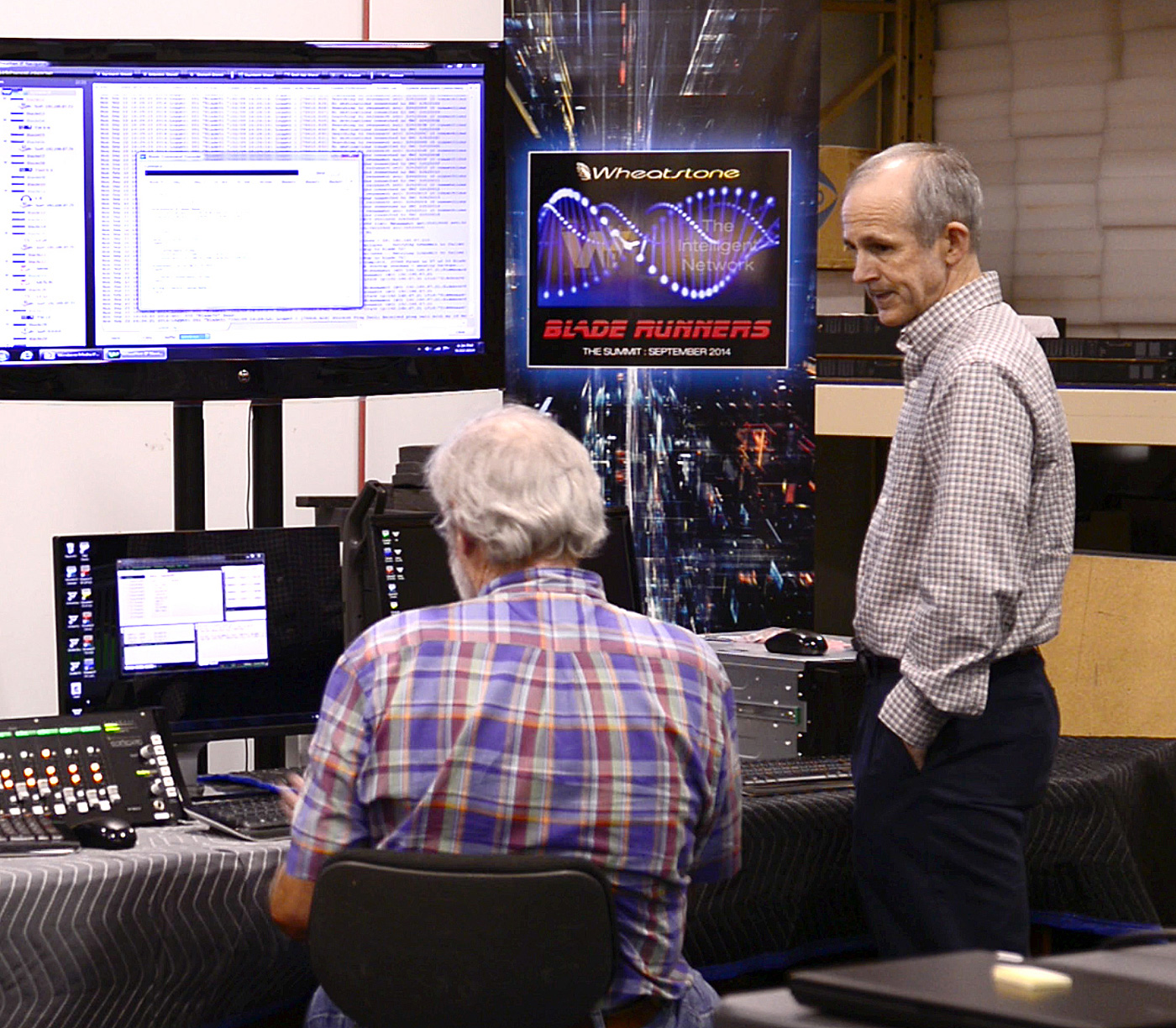

Altogether, we gathered up more than a third of a million dollars' worth of WheatNet-IP gear, a large assembly of Wheatstone studio equipment representative of what we've installed in major market facilities. The whole of it was lined up in a U shape with a table down the middle, fittingly forming a huge “W” in the middle of our production plant in New Bern, NC.

And the “BLADE RUNNERS” for the weeklong adventure? Officially, our fearless engineering manager Andy Calvanese, ace field engineer Kelly Parker, software engineer Scott Gerenser, and hardware engineer Dave Breithaupt made up the core team, with additional support from our project engineers as needed. Unofficially, though, you’d have to include just about everyone else in the factory who stopped in on a lunch break or whenever they could find an excuse to see what was happening.

The idea behind BLADEFEST was to subject the WheatNet-IP system in real time to the toughest studio demands possible, with bonus points given to anyone who could break it.

So, of course, they pushed buttons, ran ridiculously complex software routines and power outage tests, and stressed the system to the max, at one point running 462 channels of audio between two points over a single gigabit network cable. They had more than 2,500 audio sources to play with, two Cisco core switches trunked together, and a dozen Cisco edge switches. Audio from the head of the chain could be looped back and forth through all the BLADEs (each one has a built-in router), all the control surfaces, and all the audio processors, and come out the other end having passed through every audio device in the entire system. If there was a failure, our BLADE RUNNERS could see immediately where the audio trail ended on a “wall of meters” on a PC screen that monitored every BLADE, console, and processor in the system. To monitor all 80-plus items in the network, they had to replace a run-of-the-mill PC with one that had a beefier graphics card.

The BLADE RUNNERS were able to get really creative with our Screen Builder custom application. As they thought up devious new ways to break the system, our software programmers and scripters would write code to test their theories.

Getting Along

We were most curious about how our new third-generation BLADE-3 I/O access units would interact with the network software and coexist with our second-generation BLADEs. We had already tested them singly and grouped together in smaller networks, but not until now had we been able to put them together in one large system to see how they reacted to one another. At the same time, we were also testing out new Cisco switches to make sure we could advise our clients correctly when they asked about switch choices.

The BLADE RUNNERS discovered immediately that a few adjustments to the setup software would get all the units to play together more efficiently. Like their BLADE-2 counterparts, BLADE-3s “elect” a master that all the others in the network slave to, based on uptime, version, and user preferences. Usually these are civil elections, but as our BLADE RUNNERS soon discovered, the process needed optimization for an initial system boot-up with a cold start on the switches. Because the BLADEs boot up much faster than the Ethernet switches, a 52-BLADE system first becomes 52 single-BLADE systems while it waits for the switches to boot, and only then resolves into the single system it is supposed to be. A software change corrected the tuning of that process, and our customers will be glad to know they can expect the same easy setup with new BLADE-3s that they’ve gotten used to with our BLADE-2s: just unbox the units, press a button, and choose an ID, and all the IP addresses and system setup routines are handled automatically (unless, of course, you want to do all this grunt work manually).
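
To picture how such an election can split into 52 separate systems and then resolve into one, here is a minimal Python sketch. Wheatstone's actual election algorithm is not described in this article, so the ranking order (user preference, then software version, then uptime), the `Blade` fields, and the helpers `rank`, `elect_master`, and `masters_per_partition` are all hypothetical. Each group of BLADEs that can see one another elects its own master; while the switches are still booting, every BLADE can see only itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    # Hypothetical fields; the real election criteria are not published here.
    blade_id: int
    preferred: bool   # user preference flag chosen during setup
    version: tuple    # software version, e.g. (3, 0, 0)
    uptime_s: int     # seconds since this unit booted


def rank(blade: Blade) -> tuple:
    # Assumed priority: preference, then newer version, then longer uptime.
    # The lowest ID breaks any remaining tie so the result is deterministic.
    return (blade.preferred, blade.version, blade.uptime_s, -blade.blade_id)


def elect_master(visible: list[Blade]) -> Blade:
    """Pick the master among the BLADEs a unit can currently see."""
    return max(visible, key=rank)


def masters_per_partition(partitions: list[list[Blade]]) -> list[int]:
    """Each isolated group of BLADEs elects its own master."""
    return [elect_master(group).blade_id for group in partitions]


blades = [Blade(i, preferred=(i == 7), version=(3, 0, 0), uptime_s=100 + i)
          for i in range(1, 53)]

# Switches still booting: each BLADE sees only itself, so 52 masters.
isolated = masters_per_partition([[b] for b in blades])

# Switches up: one network, one master.
merged = masters_per_partition([blades])
```

With the switches down, `isolated` holds 52 masters, one per BLADE; once every unit is visible, `merged` is `[7]`, because BLADE 7 carries the (hypothetical) preference flag. Ranking with a single tuple keeps the election deterministic, so every BLADE that sees the same set of peers picks the same master.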

Testing One, Two, Three

Once our BLADE RUNNERS got the system up and running, they spent the next three days trying to bring it to its knees and testing recovery times in the process. We wanted to know how fast the system could recover from losing a switch or from losing power, for example.

We found out: less than a minute and a half (yes, you read that correctly) to bring all 80-plus elements in the network back online. That’s 52 BLADEs and six consoles, among other pieces, cold started and back up and running all their audio streams in a minute and a half. That is far less time than the switches themselves take to boot (the core switches take 5 to 10 minutes, depending on model), which proves once again the value of UPS and fail-safe power supplies for these critical pieces of an AoIP system: keep the switches powered, and the rest of the network recovers far faster than the switches alone could reboot.

Stressing Out

The BLADE RUNNERS performed many stress tests and repeated them over and over until they were sure of the results. Some of these were:

Cold start test: everything powered on simultaneously to simulate system recovery after a major power outage in which UPS or backup power had not been deployed.

Reboot test: switches all left “on” but all BLADEs repowered simultaneously, to show how the system performs after a software update and subsequent reboot.

Fracture test: various Ethernet links between switches disconnected so the system fractures into several smaller systems, then reconnected, to show how the system recovers from broken trunk links or a switch failure.

Splinter test: all switches turned off so each BLADE becomes a standalone system, followed by recovery as the switches are turned back on.

As was to be expected, our BLADE RUNNERS were especially preoccupied with jamming as many megabits of audio through the system as possible. At one point BLADEFEST turned into an episode of MythBusters, with the engineers re-cabling the system so that half of the BLADEs were on one side of the room and the other half on the other side. Each side was connected to its own Cisco core switch, and the two core switches were connected together over a single Gigabit Ethernet link.

The BLADE RUNNERS then started routing audio through the link to see how many signals they could push from one side to the other before it started to break down. Once a stream had been sent across the link, they would send it back in the other direction, bring it into the next port, and send it back across the link again. In this manner they could zig-zag the same audio through the link many times. Because each BLADE has a built-in headphone output with system-wide routing control, they could listen to the audio at any point in the chain and could, in fact, monitor it at the end after it had zigged and zagged hundreds of times across the link. That let them watch for audio dropouts, distortion, cascading jitter problems, and so forth.
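
The zig-zag routing can be modeled as a list of link crossings. In this minimal Python sketch, the side labels `"A"` and `"B"` and the functions `zigzag_path` and `link_load` are hypothetical. It assumes that each pass re-enters a BLADE on the far side and goes back out as a separate stream, so every crossing occupies its own slot on the link in the direction it travels.

```python
def zigzag_path(crossings: int, start: str = "A") -> list[tuple[str, str]]:
    """List the link crossings made by one stream bounced back and forth.

    Each crossing is (from_side, to_side). "A" and "B" are hypothetical
    labels for the two halves of the room.
    """
    other = {"A": "B", "B": "A"}
    hops = []
    side = start
    for _ in range(crossings):
        hops.append((side, other[side]))
        side = other[side]  # the stream re-enters a BLADE on the far side
    return hops


def link_load(hops: list[tuple[str, str]]) -> dict[tuple[str, str], int]:
    """Count how many simultaneous streams each direction of the link carries."""
    load: dict[tuple[str, str], int] = {}
    for hop in hops:
        load[hop] = load.get(hop, 0) + 1
    return load


hops = zigzag_path(5)
load = link_load(hops)
```

For five crossings, three streams run from A to B and two run from B to A, so a long zig-zag loads both directions of the full-duplex link roughly equally.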

The system was happy, and the link held up well as they approached the gigabit data rate. In fact, the system still held together once they had taxed the switch fabric enough to start hearing audio problems. The first issues were occasional random clicks in one or another of the hundreds of audio streams they were monitoring, as the audio buffers ran out before the switch could get the next packet onto the needed port. The clicks were audible only when the audio was a tone, but the engineers quickly discovered how close to the cliff they were: adding just a few more streams made all the audio channels break up as the switch and link ran out of steam. Up to that breaking point, the audio was as clean and steady as if it were the only channel on the cable. More importantly, system integrity never broke down; apparently enough control packets were making it through to hold the system together.
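
The click mechanism can be illustrated with a toy playout buffer. This Python sketch assumes one packet sent per tick, a fixed prebuffer depth measured in ticks, and made-up per-packet queueing delays; none of these values come from WheatNet-IP, and the functions `arrivals` and `count_underruns` are hypothetical. A packet that arrives after its playout time leaves a gap in the audio, which is heard as a click.

```python
def arrivals(delays: list[int]) -> list[int]:
    """Packet i leaves the sender at tick i and arrives delays[i] ticks later."""
    return [i + d for i, d in enumerate(delays)]


def count_underruns(arrival_ticks: list[int], prebuffer: int) -> int:
    """Count packets that miss their playout slot (audible clicks).

    Packet i is scheduled to play at tick i + prebuffer. If it has not
    arrived by then, the buffer has run dry for that slot.
    """
    return sum(1 for i, t in enumerate(arrival_ticks) if t > i + prebuffer)


# Light load: every packet is delayed 1 tick, well inside a 2-tick buffer.
light = count_underruns(arrivals([1] * 10), prebuffer=2)

# Near the cliff: occasional queueing spikes exceed the buffer depth.
near_cliff = count_underruns(arrivals([1, 1, 1, 3, 1, 1, 1, 1, 3, 1]), prebuffer=2)

# Overloaded switch: the queue never drains, so every packet is late.
overloaded = count_underruns(arrivals([5] * 10), prebuffer=2)
```

The three cases produce 0, 2, and 10 late packets respectively, mirroring the pattern the BLADE RUNNERS heard: clean audio, then occasional clicks, then wholesale breakup.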

Overall, our BLADE RUNNERS confirmed in practice what we had known all along in theory: the WheatNet-IP audio network can carry traffic right up to the upper limit of Ethernet. Until that limit is reached, there’s virtually no packet loss through the system. Over the gigabit link, 462 channels of 24-bit uncompressed audio were streaming across the one cable in one direction, with a few hundred more going back the other way.
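
The payload side of that figure is simple arithmetic. The article gives 462 channels at 24 bits; the 48 kHz sample rate below is an assumption, and `payload_mbps` is a hypothetical helper. The result counts raw audio only; packet headers and control traffic add to the load actually carried on the wire, by amounts not given here.

```python
def payload_mbps(channels: int, bits_per_sample: int, sample_rate_hz: int) -> float:
    """Raw PCM payload in megabits per second, before any packet headers."""
    return channels * bits_per_sample * sample_rate_hz / 1_000_000


# 462 channels and 24-bit depth come from the test; 48 kHz is an assumption.
rate = payload_mbps(462, 24, 48_000)

# Gigabit Ethernet is full duplex: 1000 Mbit/s in each direction.
share_of_gigabit = rate / 1000
```

At 48 kHz that works out to about 532 Mbit/s of raw audio, roughly 53% of one direction of a gigabit link, before any packet overhead.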

They were also able to duplicate, and fix, an annoying issue that had been taunting Kelly Parker in the field for weeks. Under certain conditions, the L-8 control surface, our newest console, kept switching the faders for certain audio sources off during system recovery. It wasn’t the kind of problem that would affect studio operations, but it was annoying enough that it needed to be addressed. It turned out to be a minor software bug, which was fixed on the spot.

There was another persistent problem that Scott Gerenser had been chasing in the field for some time. Occasionally, when he tried to dial in a source on the E-6 control surface by turning the knob in a certain sequence, the selector would scroll forward a distance and then skip a source of its own accord. This happened only in very specific instances involving large numbers of sources, and no one had been able to reproduce it reliably until BLADEFEST. The BLADE RUNNERS were able to replicate the fault and fix the glitch on the spot, proving once again the value of real-world testing in a representative environment.

Incredibly, even after stress testing more than 80 pieces of Wheatstone gear, the engineers found only one faulty BLADE: its onboard flash memory chip had a problem, which became apparent when they loaded audio clips onto the built-in clip player.

BLADEFEST was a great opportunity to test and demonstrate the capability and capacity of the WheatNet-IP system, check out the new functionality in our version 3 BLADEs, and remind ourselves once again just how cool it is to have one interoperable system with all these different components (mixers, automation, controllers, codecs, processors, and software applications) working seamlessly together over IP.

BIO: Dee McVicker has been following changes in broadcasting for more than 20 years, more recently as a part of Wheatstone's marketing team. This is her first BLADEFEST.